Session 1.2.2 – VisualDx: Neural Networks and Convolutional Neural Networks

The Problem: Accurate Diagnosis of Rashes is Difficult

Clinicians often encounter diagnostic dilemmas where pattern recognition is key—for example, assessing a rash or lesion. Human interpretation varies by experience, and subtle visual differences matter. To assist clinical decision-making, automated tools—especially those based on deep learning—have emerged.

VisualDx: A Rash Diagnosis App

In this session, we will treat a tool like VisualDx as if it were powered by a convolutional neural network (CNN) for rash diagnosis. Understanding how CNNs work explains both their power and limitations in clinical use.

Learning Objectives

By the end of this session, you will be able to:

- Explain the fundamental idea of neural networks: describe how networks map inputs (e.g., pixels, features) to outputs by learning weights and applying nonlinear activation functions.

- Define overfitting and data needs: explain why neural networks can learn complex relationships yet be prone to overfitting, and why they need large, representative datasets.

- Explain how CNNs work: describe how CNNs process images through convolutional filters, weight sharing, pooling, and hierarchical feature learning.

- Understand why interpretability matters: explain why interpretability is a critical issue for trust and safety in clinical AI.

1. The Fundamental Concept of Neural Networks

What Is a Neural Network?

Neural networks are computational models inspired by biological neural systems. They consist of:

- Nodes (neurons) that compute simple functions

- Weights on connections between nodes

- Activation functions that introduce non-linearity

Core idea: A neural network learns a mapping from input to output by adjusting weights to minimize prediction error.

Interactive Neural Network Demo (panels: Input Layer; Weights: Input → Hidden; Weights: Hidden → Output; Activation Function; Hidden Node 1 and Hidden Node 2 Activation)
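The demo's components map onto a few lines of code. The sketch below is a minimal forward pass for a network with two inputs, two hidden nodes, and one output, in plain Python; the function and parameter names (`forward`, `w_ih`, `w_ho`, and so on) are illustrative assumptions, not part of any particular library.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Rectified linear unit: passes positive values, replaces negatives with 0."""
    return max(0.0, z)


def forward(x, w_ih, b_h, w_ho, b_o, activation=sigmoid):
    """Forward pass of a tiny fully connected network (illustrative names).

    x     : input-layer values, e.g. [feature_1, feature_2]
    w_ih  : w_ih[j][i] is the weight from input i to hidden node j
    b_h   : one bias per hidden node
    w_ho  : w_ho[j] is the weight from hidden node j to the output
    b_o   : output bias
    """
    hidden = []
    for weights, bias in zip(w_ih, b_h):
        # Each hidden node: weighted sum of the inputs, then a non-linearity
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        hidden.append(activation(z))
    # Output node: weighted sum of hidden activations, squashed into (0, 1)
    out = sigmoid(sum(w * h for w, h in zip(w_ho, hidden)) + b_o)
    return hidden, out


hidden, out = forward(
    x=[1.0, 2.0],
    w_ih=[[0.5, -1.0], [1.0, 1.0]],
    b_h=[0.0, 0.0],
    w_ho=[1.0, 1.0],
    b_o=-3.0,
    activation=relu,
)
print(hidden, out)  # [0.0, 3.0] 0.5
```

Here hidden node 1 receives a negative weighted sum, so the ReLU switches it off. Swapping `activation=relu` for the default `sigmoid` changes both hidden activations and therefore the output. Learning is the process of finding good weight values automatically instead of setting them by hand.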

How Do Neural Networks Learn?

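Learning happens through a process called backpropagation, combined with gradient descent:

- The network makes a prediction

- The error (loss) between the predicted and true values is computed

- The error is propagated backward through the network to compute how much each weight contributed to it (the gradient)

- Each weight is adjusted slightly in the direction that reduces future error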

The network doesn't "reason"; it learns statistical associations between patterns and labels.
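The four steps can be sketched for a tiny two-layer network in plain Python. This is a minimal sketch under stated assumptions: sigmoid activations, a binary cross-entropy loss, per-example (stochastic) gradient descent, and a toy dataset encoding logical OR. Real training uses libraries with automatic differentiation, but the steps are the same. `train` and its parameters are hypothetical names.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, n_hidden=2, lr=0.5, epochs=2000, seed=0):
    """Train a 2-layer network with backpropagation + gradient descent.

    data: list of (inputs, target) pairs with target 0 or 1.
    Returns the learned parameters and the mean loss of each epoch.
    """
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Small random starting weights; biases start at zero
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, y in data:
            # 1. Forward pass: make a prediction
            h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j])
                 for j in range(n_hidden)]
            p = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
            # 2. Compute the error (binary cross-entropy); clamp p to avoid log(0)
            p_safe = min(max(p, 1e-12), 1 - 1e-12)
            total_loss -= y * math.log(p_safe) + (1 - y) * math.log(1 - p_safe)
            # 3. Propagate the error backward with the chain rule
            d_out = p - y  # gradient of the loss w.r.t. the output's weighted sum
            d_hid = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            # 4. Move every weight a small step against its gradient
            for j in range(n_hidden):
                w2[j] -= lr * d_out * h[j]
                b1[j] -= lr * d_hid[j]
                for i in range(n_in):
                    w1[j][i] -= lr * d_hid[j] * x[i]
            b2 -= lr * d_out
        history.append(total_loss / len(data))
    return (w1, b1, w2, b2), history


or_data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
params, history = train(or_data)
print(f"loss: epoch 1 = {history[0]:.3f}, final = {history[-1]:.3f}")
```

Note that `d_hid` is computed before any weight in `w2` is updated, so each step uses gradients from a single, consistent set of weights.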

2. Flexibility and Data Requirements

Why Neural Networks Are Powerful

Neural networks can capture nonlinear relationships and complex interactions between inputs and outputs that simpler models cannot.

This flexibility allows them to detect subtle patterns in complex data like images.

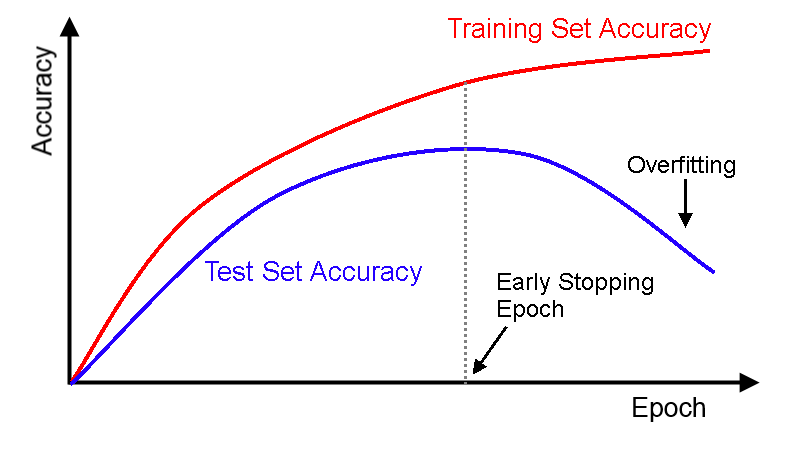

Overfitting: When Flexibility Becomes a Liability

Because neural networks are so flexible, they can:

- memorize noise in the training data

- show excellent performance on training sets

- perform poorly on new, unseen data

This is called overfitting.
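Overfitting can be demonstrated without a neural network at all. The sketch below uses a deliberately extreme stand-in for an over-flexible model: a 1-nearest-neighbour "memorizer" that repeats the label of the closest training example. The data are synthetic and hypothetical: one feature `x` in [0, 1], a true rule `x > 0.5`, and 20% of labels flipped to mimic labeling noise.

```python
import random


def make_data(n, noise, rng):
    """Synthetic 1-D data: the true label is 1 when x > 0.5, but a
    fraction `noise` of labels is flipped to simulate labeling errors."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data


def memorizer(train_set):
    """Very flexible model: copy the label of the nearest training example
    (1-nearest neighbour), so every training label, noise included, is
    reproduced exactly."""
    def predict(x):
        return min(train_set, key=lambda pair: abs(pair[0] - x))[1]
    return predict


def threshold_rule(x):
    """Inflexible model: a single cut-off."""
    return int(x > 0.5)


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


rng = random.Random(42)
train_set = make_data(200, noise=0.2, rng=rng)
test_set = make_data(1000, noise=0.2, rng=rng)
memo = memorizer(train_set)

for name, model in [("memorizer", memo), ("threshold", threshold_rule)]:
    print(f"{name:>9}: train {accuracy(model, train_set):.2f}, "
          f"test {accuracy(model, test_set):.2f}")
```

Expect the memorizer to score perfectly on the training set yet worse than the simple threshold on held-out data: it has learned the noise along with the signal.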

The Three Datasets

To develop trustworthy models, we separate data into:

- Training set: Used to learn model parameters

- Validation set: Used to tune model choices and hyperparameters

- Test set: Held out until the end to estimate real-world performance

Data leakage occurs when information from the test set influences model training or tuning.
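One common source of leakage in clinical imaging is splitting by image rather than by patient: if the same patient's lesion appears in both the training and test sets, test performance looks better than it should. A minimal sketch of a patient-level split follows; the record format (a dict with a `patient_id` key), the 70/15/15 proportions, and the `split_by_patient` name are assumptions for illustration.

```python
import random
from collections import defaultdict


def split_by_patient(records, fractions=(0.7, 0.15, 0.15), seed=0):
    """Assign whole patients (not individual images) to train/validation/test.

    records: list of dicts with a "patient_id" key (hypothetical schema).
    Returns a dict mapping split name -> list of records.
    """
    by_patient = defaultdict(list)
    for record in records:
        by_patient[record["patient_id"]].append(record)

    patients = sorted(by_patient)           # fixed order before shuffling
    random.Random(seed).shuffle(patients)   # reproducible random assignment
    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    groups = {
        "train": patients[:n_train],
        "validation": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],  # the remaining patients
    }
    return {name: [r for p in ids for r in by_patient[p]]
            for name, ids in groups.items()}


# 20 hypothetical patients with 3 images each
records = [{"patient_id": f"P{i // 3}", "image": f"img_{i}.jpg"} for i in range(60)]
splits = split_by_patient(records)
for name, rows in splits.items():
    print(name, len(rows), "images")
```

Because no patient appears in more than one split, the test set measures performance on genuinely new people.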

Data Needs

To generalize well, neural networks typically require:

- Large datasets

- Representative samples across demographics, devices, settings

- High-quality labels

More data helps but does not guarantee generalization if the data is unrepresentative or biased.
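Representativeness can be checked directly by reporting performance per subgroup instead of only overall. A minimal sketch, with made-up labels and predictions chosen only to show how a respectable overall figure can hide complete failure in an under-represented group; `accuracy_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Overall accuracy plus accuracy within each subgroup
    (e.g. skin tone category, camera type, or clinic site)."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    return overall, {g: correct[g] / total[g] for g in total}


# Hypothetical results: group "A" dominates the test set, group "B" is rare
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 1]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 8 + ["B"] * 2
overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall, per_group)  # 0.8 {'A': 1.0, 'B': 0.0}
```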

3. Convolutional Neural Networks (CNNs) and Image Recognition

Why Standard Neural Networks Struggle with Images

Raw images are large and high-dimensional. In a fully connected network, every pixel value would connect to every neuron in the first layer, which would require an impractical number of parameters.
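A quick parameter count makes the difference concrete. The sizes below are illustrative assumptions (a 224 × 224 RGB image, a first dense layer of 1,000 units, and a convolutional layer of 64 filters of size 3 × 3); each formula counts one weight per connection plus one bias per unit or filter.

```python
def dense_params(height, width, channels, n_units):
    """Fully connected layer on a flattened image: every pixel value
    connects to every unit, plus one bias per unit."""
    return height * width * channels * n_units + n_units


def conv_params(kernel_size, in_channels, n_filters):
    """Convolutional layer: each filter has kernel_size x kernel_size x
    in_channels weights plus one bias, and the same filter is reused at
    every image position (weight sharing), so image size does not matter."""
    return kernel_size * kernel_size * in_channels * n_filters + n_filters


print(f"dense: {dense_params(224, 224, 3, 1000):,}")  # dense: 150,529,000
print(f"conv:  {conv_params(3, 3, 64):,}")           # conv:  1,792
```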

How CNNs Work

CNNs use:

- Convolutional filters (cross-correlation) to detect local patterns (e.g., edges, textures)

- Weight sharing to reuse filters across an image

- Pooling to reduce spatial dimensionality

- Hierarchical feature learning — early layers detect simple patterns, later layers detect complex patterns

This makes them efficient and effective for image analysis.
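Cross-correlation, a non-linearity, and pooling can be written out in plain Python. This sketch assumes a single-channel image, a hand-picked vertical-edge filter, stride 1 with no padding, and 2 × 2 max pooling; in a real CNN the filter values are learned rather than chosen, and libraries provide optimized versions of these operations. The function names are hypothetical.

```python
def cross_correlate2d(image, kernel):
    """'Valid' 2-D cross-correlation: slide the kernel over the image and,
    at each position, sum the products of overlapping values."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu_map(feature_map):
    """Apply ReLU element-wise: keep positive responses, zero the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the strongest response in each
    size x size block, shrinking the map."""
    return [[max(feature_map[i + a][j + b]
                 for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]


# 6 x 6 image: dark (0) on the left, bright (1) in the two rightmost columns
image = [[0, 0, 0, 0, 1, 1] for _ in range(6)]
# Vertical-edge filter: responds where brightness increases left to right.
# The same 9 weights are reused at every position (weight sharing).
edge_filter = [[-1, 0, 1] for _ in range(3)]

feature_map = relu_map(cross_correlate2d(image, edge_filter))
pooled = max_pool2d(feature_map)
print(feature_map)  # [[0, 0, 3, 3], [0, 0, 3, 3], [0, 0, 3, 3], [0, 0, 3, 3]]
print(pooled)       # [[0, 3], [0, 3]]
```

The pooled map is a quarter the size of the feature map but still records that the edge lies on the right. Stacking several such layers is what lets later layers respond to combinations of edges, such as textures or lesion borders.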

Interactive CNN Convolution Demo: Detecting the Letter "S"

How it works: A filter, whose pattern can be edited, slides across the sentence "ALL YOUR BASE". At each position, the cross-correlation is calculated by multiplying the overlapping values and summing them. A cross-correlation plot shows this value at every position; higher values indicate a better match with the filter pattern.
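The same calculation can be sketched in one dimension. Instead of letter bitmaps from "ALL YOUR BASE", this hypothetical example uses a short sequence of 0s and 1s and a three-value filter. The negative middle weight penalizes a 1 where the pattern expects a 0, so only exact matches reach the highest score.

```python
def cross_correlate1d(signal, pattern):
    """At each offset where the pattern fits entirely inside the signal,
    multiply overlapping values and sum them."""
    k = len(pattern)
    return [sum(signal[i + j] * pattern[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


signal = [0, 1, 0, 1, 1, 0, 1, 0, 0]
pattern = [1, -1, 1]          # "on, off, on"
scores = cross_correlate1d(signal, pattern)
best = [i for i, s in enumerate(scores) if s == max(scores)]
print(scores)  # [-1, 2, 0, 0, 2, -1, 1]
print(best)    # [1, 4]
```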

Return to VisualDx (as CNN-based tool)

Tools like VisualDx can be framed as CNN-based systems:

- They process clinical images through layers of learned filters

- They output likely diagnoses based on visual pattern associations

- They do not perform causal reasoning

This perspective explains both their strengths (pattern detection) and limitations (lack of mechanistic understanding, vulnerability to distribution shifts).

4. Interpretability and Trust

What Is Interpretability?

In medicine, interpretability means understanding why a model made a particular prediction in terms that clinicians can evaluate and trust.

Interpretability supports:

- Clinical reasoning

- Error detection

- Patient explanation

- Regulatory trust

Neural Networks Are Often Opaque

Neural networks' internal knowledge is distributed across thousands or millions of parameters.

They do not provide human-readable reasoning steps.

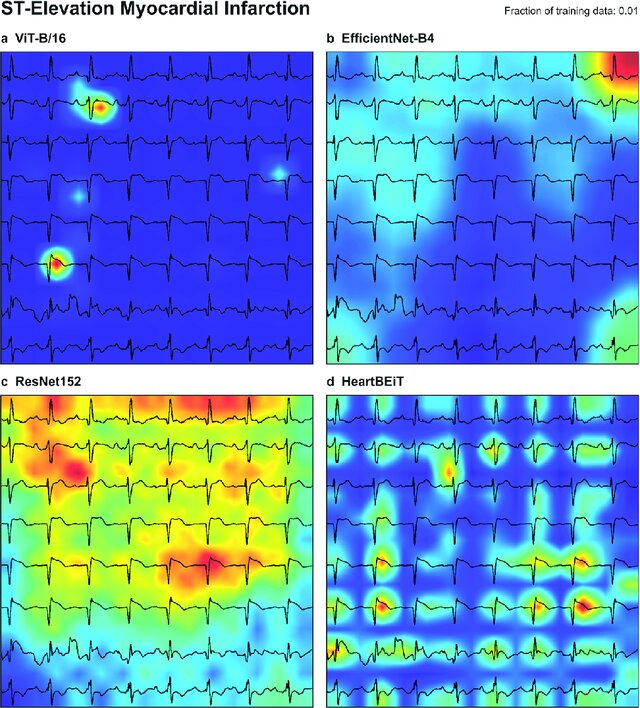

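Even techniques like saliency maps and heatmaps only highlight which parts of an input influenced a prediction; they provide some insight but do not fully explain the model's reasoning.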

Interactive Saliency Map Visualization: STEMI Saliency Map

Which model would you trust the most?

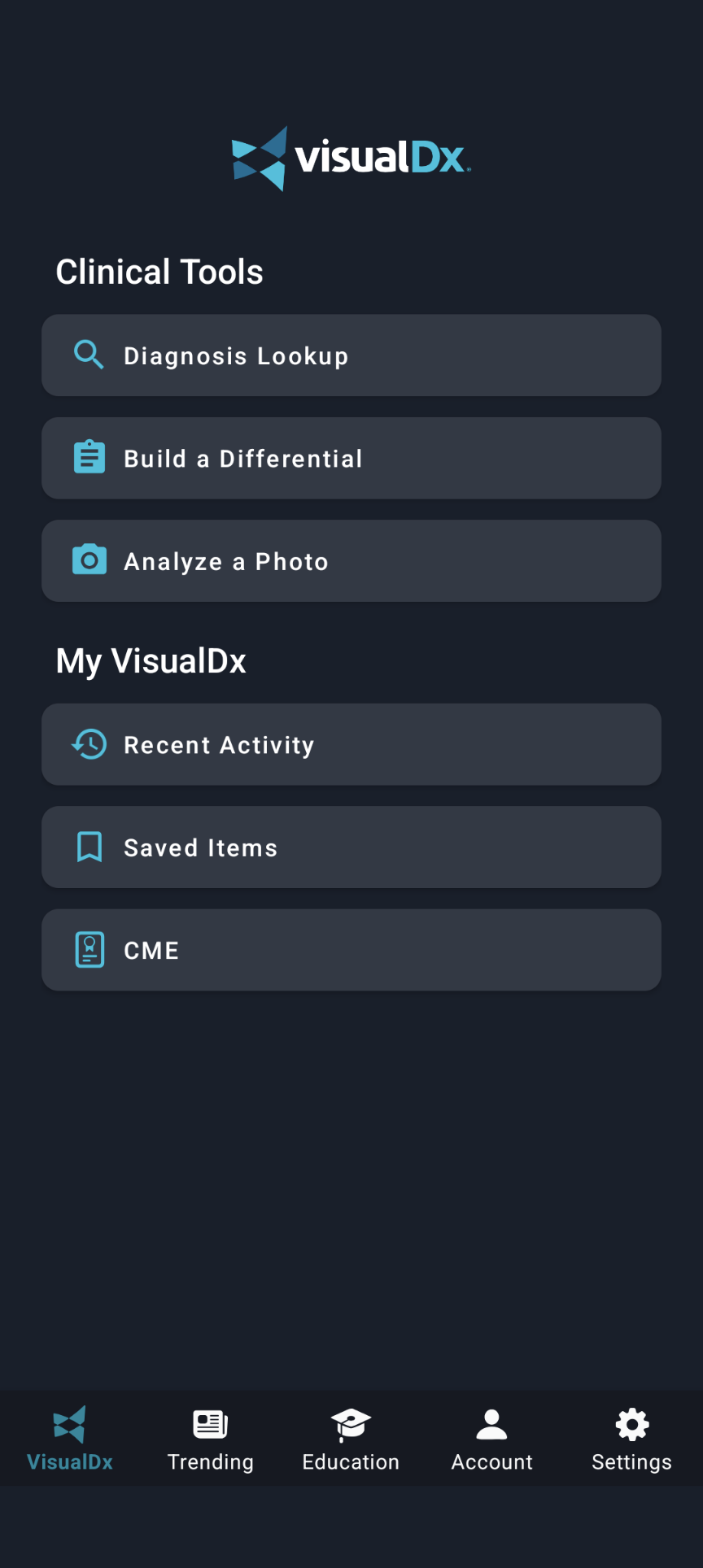
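Saliency maps can be produced in several ways. The sketch below uses occlusion, one simple model-agnostic approach (not necessarily the method behind the STEMI visualization): hide one pixel at a time and record how much the model's score drops. The "model" is a hypothetical stand-in whose score depends on only two pixels, so the correct map is known in advance.

```python
def occlusion_saliency(model, image, baseline=0.0):
    """Replace each pixel in turn with `baseline` and record the drop in
    the model's score; larger drops mark more influential pixels."""
    base_score = model(image)
    saliency = []
    for i in range(len(image)):
        row = []
        for j in range(len(image[0])):
            occluded = [r[:] for r in image]  # copy, leave the input untouched
            occluded[i][j] = baseline
            row.append(base_score - model(occluded))
        saliency.append(row)
    return saliency


def toy_model(img):
    """Hypothetical model whose score depends only on two pixels."""
    return 2.0 * img[1][1] + 1.0 * img[1][2]


image = [[1.0] * 3 for _ in range(3)]
for row in occlusion_saliency(toy_model, image):
    print(row)
# [0.0, 0.0, 0.0]
# [0.0, 2.0, 1.0]
# [0.0, 0.0, 0.0]
```

The map shows *where* the model looked, not *why*. If a real model relied on an irrelevant feature such as a ruler or ink marking in the photo, the map would highlight it, but a clinician would still have to judge whether that reliance makes medical sense.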

5. VisualDx: CNN for Rash Detection

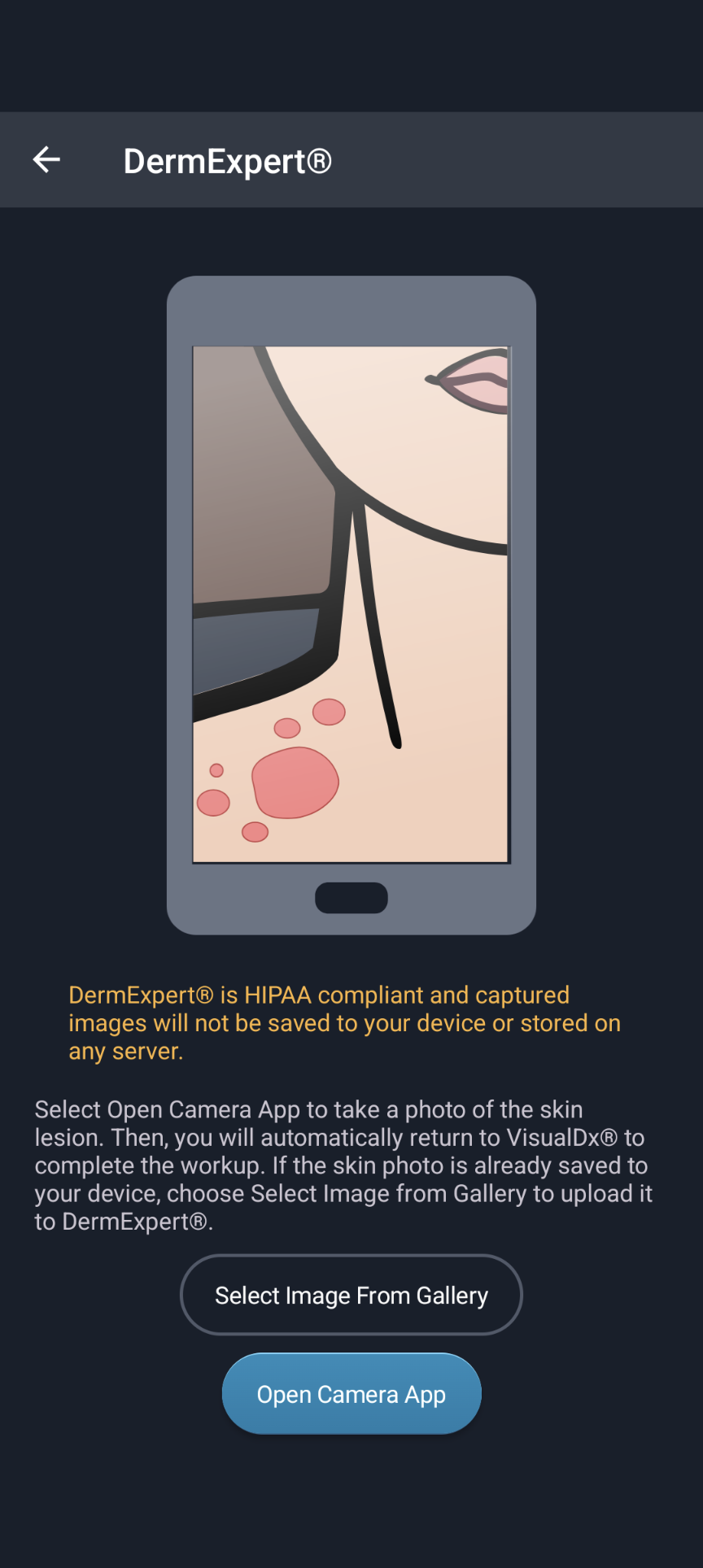

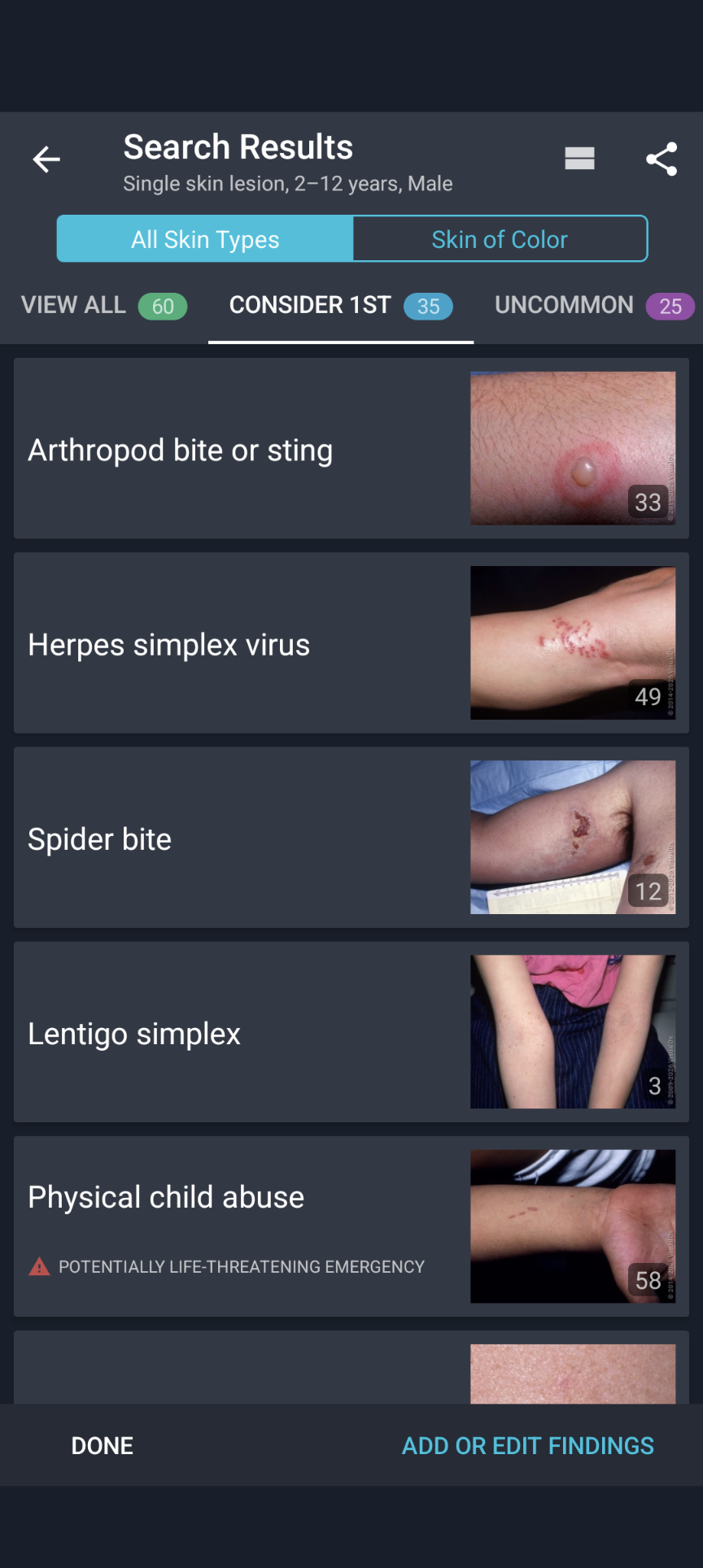

VisualDx demonstrates how CNN-based systems can be applied to clinical image recognition. When a clinician inputs patient characteristics (age, sex, clinical findings) and uploads an image of a skin lesion, the system processes the visual patterns through learned filters and returns a ranked list of possible diagnoses.

The screenshots above illustrate this process: the system analyzes visual patterns in skin lesions to suggest differential diagnoses, much as a CNN processes image data through convolutional layers to identify relevant features. This highlights both the practical utility of the approach and the importance of understanding the underlying pattern recognition, especially given the interpretability challenges discussed earlier.
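How a ranked list might be produced can be sketched generically. Many CNN classifiers end with a softmax that turns one raw score per diagnosis into probabilities; this is an assumption about how a tool like VisualDx could work, not a description of its actual implementation. The diagnosis labels and scores below are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def ranked_differential(labels, scores, top_k=3):
    """Return the top_k (label, probability) pairs, most likely first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda lp: lp[1], reverse=True)
    return ranked[:top_k]


labels = ["psoriasis", "atopic dermatitis", "tinea corporis", "contact dermatitis"]
scores = [2.0, 1.0, 0.5, -1.0]  # hypothetical final-layer outputs
for label, p in ranked_differential(labels, scores):
    print(f"{label:<20} {p:.2f}")
```

These numbers express how strongly the image resembles patterns seen in training for each label. They are not guaranteed to be calibrated probabilities of disease, and a condition absent from the training labels can never appear in the list.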

6. Advantages and Limitations of Neural Networks

Assumptions and Requirements of Neural Networks

When Neural Networks Work Well

✅ Good for:

- Complex pattern recognition tasks (images, audio, text)

- When you have large, high-quality datasets

- When relationships are highly nonlinear

- When interpretability is less critical than performance

- When you need to process high-dimensional data

- When you can leverage transfer learning from pre-trained models

- Real-time prediction tasks once the model is trained

When to Consider Alternatives

❌ Consider other methods when:

- You have limited data (neural networks need substantial datasets)

- Interpretability is critical for clinical decision-making

- You need to understand causal relationships (neural networks learn associations)

- Relationships are simple and approximately linear (linear or logistic regression may suffice)

- You have very few features relative to sample size (simpler models may be more appropriate)

- Computational resources are severely constrained

- Data may not represent the target population (distribution shift risk)

- You need to predict probabilities for binary outcomes with small datasets (logistic regression may be better)

Key Challenges in Clinical Applications

Two critical considerations for neural networks in healthcare:

- Interpretability vs. Performance Trade-off: Neural networks often achieve superior performance but at the cost of interpretability. In clinical settings, understanding why a model made a prediction can be as important as the prediction itself. Techniques like saliency maps and attention mechanisms provide some insight but don't fully explain the model's reasoning.

- Distribution Shift and Generalization: Neural networks are highly sensitive to differences between training and deployment data. Changes in imaging equipment, patient populations, clinical protocols, or disease prevalence can significantly degrade performance. This requires:

- Careful validation on diverse populations

- Ongoing monitoring of model performance

- Regular retraining with new data

- Human oversight to catch failures

Both challenges are particularly important in healthcare, where model failures can have serious consequences and where clinicians need to trust and understand AI-assisted decisions.
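Ongoing monitoring can start simply: compare accuracy on recent, clinician-confirmed cases against the accuracy measured at validation. A minimal sketch; the window size, the tolerated drop, and the `monitor` name are assumptions, and a fuller setup could also track calibration, subgroup performance, and changes in the incoming images.

```python
def monitor(outcomes, baseline_acc, window=50, max_drop=0.05):
    """Return (start_index, accuracy) for every consecutive block of
    `window` cases whose accuracy falls more than `max_drop` below the
    validation-time accuracy.

    outcomes: chronological 1 (model correct) / 0 (model wrong) flags,
    e.g. from cases later confirmed by a clinician.
    """
    alerts = []
    for start in range(0, len(outcomes) - window + 1, window):
        acc = sum(outcomes[start:start + window]) / window
        if acc < baseline_acc - max_drop:
            alerts.append((start, acc))
    return alerts


# Hypothetical log: 90% correct at first, then 70% after, say, a new camera
outcomes = [1] * 45 + [0] * 5 + [1] * 35 + [0] * 15
print(monitor(outcomes, baseline_acc=0.90))  # [(50, 0.7)]
```

An alert is a prompt for human review, not an automatic verdict: the drop could reflect a new device, a different patient mix, or simply a run of unusually hard cases.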

Summary

Key Takeaways

- Neural networks learn by adjusting weights to reduce error and can model complex patterns.

- Their flexibility makes them prone to overfitting; data quality and quantity matter.

- Neural networks are inherently difficult to interpret.

- CNNs enable automated visual pattern recognition, which underlies tools like VisualDx, but they do not understand disease.

Questions for Reflection

- What are common pitfalls in evaluating neural network-based diagnostic tools?

- How does overfitting manifest in clinical image models?

- When might lack of interpretability be unsafe in clinical practice?

- In what scenarios would CNN-based tools be most helpful, and where would you remain skeptical?